1. Ingesting and integrating data

Many businesses need automated processes for ingesting and integrating data in real time. For example, healthcare providers, clinical research organizations, healthcare analytics firms, agricultural operations, industrial plants, research organizations, and government agencies generate massive amounts of data that need to be organized, analyzed, and leveraged in a timely fashion.

Data managers perform many tasks to transform and organize data, and data scientists analyze it to give researchers and management a view of outcomes, performance, or whatever activities the organization is concerned with. Data may be temporal, spatial, and so on. It can be a one-time snapshot or cover a short or long period. Whatever its form, data must go through a series of rigorous transformations before it can be used to address a specific issue or a multitude of challenges. Some organizations still use manual processes to clean, tidy, transform, and analyze data. These processes are all prone to errors. Domain-specific knowledge is also required, and is often critical, when dealing with various types of data.

In this article, we will review some examples where automating data ingestion, data integration, and data analysis may make the difference between life and death, order and chaos.

2. Sensor data ingestion and data integration

The National Weather Service collects data from many sensors distributed across all states. Examples of data generated by sensors include minimum and maximum hourly and daily temperatures, relative humidity, etc. Other instruments, aircraft, and satellites collect snowfall, snowmelt, snow water equivalent, wind speed, and more. Data received from many locations is ingested and transformed into data products that are used by many agencies and businesses.

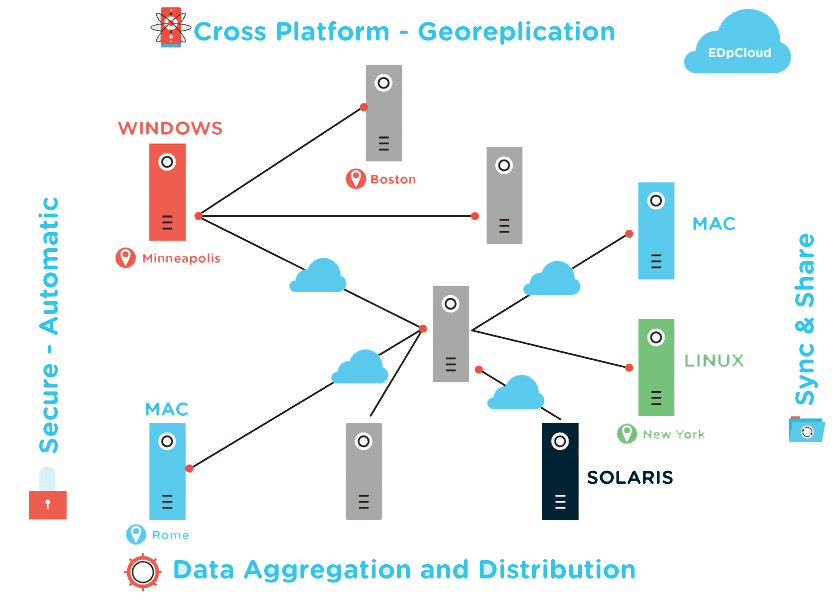
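As an illustration of the kind of transformation described above, the following is a minimal sketch of turning raw sensor readings into daily minimum and maximum temperatures per station. The record layout, the station identifiers, the function name `daily_temperature_summary`, and the plausibility range of -90 to 60 degrees Celsius are all assumptions made for this example; they do not describe any agency's actual pipeline.

```python
from collections import defaultdict
from datetime import datetime


def daily_temperature_summary(readings):
    """Summarize (station_id, ISO timestamp, temp_c) readings per station and day.

    Returns a dict keyed by (station_id, day) and a list of rejected readings.
    """
    by_key = defaultdict(list)
    rejected = []
    for station_id, timestamp, temp_c in readings:
        # Assumed quality rule: drop missing values and values outside
        # a plausible physical range instead of letting them skew min/max.
        if temp_c is None or not -90.0 <= temp_c <= 60.0:
            rejected.append((station_id, timestamp, temp_c))
            continue
        day = datetime.fromisoformat(timestamp).date().isoformat()
        by_key[(station_id, day)].append(temp_c)

    summary = {
        key: {"min": min(values), "max": max(values), "count": len(values)}
        for key, values in by_key.items()
    }
    return summary, rejected


# Hypothetical sample data for two stations.
sample_readings = [
    ("ST001", "2024-01-15T00:00:00", -4.5),
    ("ST001", "2024-01-15T12:00:00", 2.0),
    ("ST001", "2024-01-16T00:00:00", -6.0),
    ("ST002", "2024-01-15T06:00:00", None),   # sensor dropout
    ("ST002", "2024-01-15T07:00:00", 999.0),  # out-of-range value
    ("ST002", "2024-01-15T08:00:00", 1.5),
]

summary, rejected = daily_temperature_summary(sample_readings)
for key in sorted(summary):
    print(key, summary[key])
print("rejected:", len(rejected))
```

Keeping the rejected readings, rather than silently discarding them, lets a data manager review what was excluded and why.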

Other data include satellite data and airborne data (case of MIRR). NOAA, NASA, the United States Geological Survey, and the Army Corps of Engineers generate massive amounts of data from different satellites and ground observation stations. Data collected, ingested, supplied, and leveraged by these agencies can at times make the difference between life and death, and between property protection and property damage (see link above). Insurance companies and underwriters use some of these data as inputs to their risk models and to estimate their future obligations to cover customers' losses. It is imperative that data ingestion, data integration, data analysis, and the distribution of data, products, and reports be automated through distributed, real-time processes that are fault-tolerant, timely, and not prone to errors.

3. Data ingestion and integration: Case of clinical research

Many clinical research organizations conduct studies and trials for organizations in many countries around the globe. Usually, the headquarters and the majority of the scientists are located in the US, Canada, or EU countries. The scientists work in close collaboration with doctors and healthcare staff in Africa, India, Asia, and Latin America to conduct human trials and to bring the drugs to market. These studies involve human subjects and patients. The results from many of the patients must be delivered using real-time data replication and file sync, ingested into data repositories, then transformed and analyzed both locally and at headquarters. At times, the trials span many countries, and the results are critical for the lives of the subjects involved, for future patients, for the drug's approval process, and for its success in the marketplace.

Ingesting and delivering data

Automating data delivery and data analysis in real time is critical for the lives of the subjects, for the business of the pharmaceutical companies, and for the contract research organizations that conduct the research on patients.

Removing manual interventions and delivering data on time are essential for the success of the studies. Integrating data from multiple sources and from multiple randomized trials, and ensuring the quality of the data, are major determinants of the speed of discovery. Furthermore, making sure that the research and analysis are reproducible will save lives, money, and legal fees, as well as the reputations of the scientists, agencies, and businesses.
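To make the ideas of integration, quality checks, and reproducibility more concrete, here is a minimal sketch that merges records from several sites, flags incomplete or conflicting records, and computes a digest of the merged result so a later rerun can confirm it started from the same data. The schema in `REQUIRED_FIELDS`, the site names, the sample values, and the function `integrate` are hypothetical and chosen only for illustration; a real study would follow its own data standards and validation rules.

```python
import hashlib
import json

# Hypothetical minimal schema for one measurement record.
REQUIRED_FIELDS = ("trial_id", "subject_id", "visit", "value")


def integrate(sources):
    """Merge per-site record lists into one dataset, collecting quality issues."""
    merged = {}
    issues = []
    for source_name, records in sources.items():
        for record in records:
            missing = [f for f in REQUIRED_FIELDS if record.get(f) is None]
            if missing:
                issues.append((source_name, "missing fields", missing))
                continue
            key = (record["trial_id"], record["subject_id"], record["visit"])
            # The same subject and visit reported with a different value is
            # flagged for human review rather than silently overwritten.
            if key in merged and merged[key]["value"] != record["value"]:
                issues.append((source_name, "conflicting value", list(key)))
                continue
            merged[key] = {f: record[f] for f in REQUIRED_FIELDS}

    # A stable ordering plus a canonical JSON encoding gives a digest that
    # is identical whenever the merged content is identical.
    ordered = [merged[key] for key in sorted(merged)]
    canonical = json.dumps(ordered, sort_keys=True).encode("utf-8")
    return ordered, issues, hashlib.sha256(canonical).hexdigest()


# Hypothetical records from two sites.
sources = {
    "site_a": [
        {"trial_id": "T1", "subject_id": "S001", "visit": 1, "value": 5.2},
        {"trial_id": "T1", "subject_id": "S002", "visit": 1, "value": None},
    ],
    "site_b": [
        {"trial_id": "T1", "subject_id": "S001", "visit": 1, "value": 5.9},
        {"trial_id": "T1", "subject_id": "S003", "visit": 1, "value": 4.8},
    ],
}

records, issues, digest = integrate(sources)
print(len(records), "records merged;", len(issues), "issues flagged")
print("dataset digest:", digest)
```

Storing the digest alongside each analysis report is one simple way to show that a published result can be traced back to a specific version of the integrated data.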

Read about the case studies with EnduraData.

4. Feeding the data lakes and the data reservoirs

As we have seen with the few examples above, it is critical to automate the entire workflow in order to reduce errors and to deliver products that save lives. The data moves through various data acquisition systems, some fully automated and others involving human expertise. Some of the data continuously feeds data lakes and data reservoirs, from which it is ingested into various databases and applications.
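As a rough sketch of one automated step in such a workflow, the example below lands an incoming file in a date-partitioned directory of a data lake and verifies the copy with a checksum. The directory layout (`<source>/ingest_date=<date>/`), the function names, and the sample file are assumptions for illustration only; this is generic Python and does not represent the EDpCloud API.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path):
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def land_file(source_path, lake_root, source_name, ingest_date):
    """Copy a file into <lake_root>/<source_name>/ingest_date=<date>/ and verify it."""
    source_path = Path(source_path)
    target_dir = Path(lake_root) / source_name / f"ingest_date={ingest_date}"
    target_dir.mkdir(parents=True, exist_ok=True)
    target_path = target_dir / source_path.name

    expected = sha256_of(source_path)
    shutil.copy2(source_path, target_path)
    # Fail loudly if the landed copy differs, so a corrupted file never
    # reaches downstream databases and applications.
    if sha256_of(target_path) != expected:
        target_path.unlink()
        raise IOError(f"checksum mismatch while landing {source_path.name}")
    return target_path, expected


# Demonstration in a temporary directory with a hypothetical sensor file.
with tempfile.TemporaryDirectory() as workdir:
    lake_root = Path(workdir) / "lake"
    incoming = Path(workdir) / "incoming" / "readings.csv"
    incoming.parent.mkdir()
    incoming.write_text("station,temp_c\nST001,-4.5\n")

    landed_path, checksum = land_file(incoming, lake_root, "sensors", "2024-01-15")
    landed_ok = landed_path.read_text() == incoming.read_text()
    relative_path = landed_path.relative_to(lake_root).as_posix()
    print(relative_path, checksum)
```

Partitioning by ingestion date keeps each delivery separate, which makes it easier to reprocess or audit a single day's data without touching the rest.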

Download EnduraData EDpCloud software and start synchronizing data in real time.

Read more (/data-synchronization-software-solutions-for-multiple-operating-systems/).

Please feel free to read more or get more information from EnduraData.
